NEURO/ Thinking Machines, Machinic Thinkers

The field of artificial intelligence has long staked its relevance on the two-fold aim of 1) simulating intelligence (primarily understood as a brain-centred capacity) and 2) learning more about human intelligence (and brains) along the way. In other words, the aim of AI research is bidirectional, informing both computer science and the neurosciences.

Although I am, admittedly, a fan of critiques of locationist models of the brain, as well as those that condemn the cybernetic, computer-brain metaphor for being ‘exhausted’, there is no escaping the widespread influence of these very models or, to follow Sampson’s (2016) argument in its Huxleyesque tone, their conditioning reach. Scholarly critique aside, the brain-algorithm coupling has become both deeply embedded and unquestioned. Perhaps, as Mosco (2005) points out, new technologies gain power once they become banal. Likewise, their accompanying myths are only revolutionary from the moment they cease to be questioned.

Take, for instance, the notion of ‘learning’. Across today’s research, education, political and corporate contexts, processes of learning concern brains and algorithms alike. This is neatly packaged and asserted in large-scale initiatives like Google’s Brain Project or IBM’s Brain Lab or Smarter Education program, for which the brain-algorithm coupling is a given. As Williamson suggests, future classrooms will be brain/code/spaces, an idea articulating that “environments are becoming increasingly programmable, but also how such spaces are becoming dependent upon encoded models of human cognitive functioning to become adaptive learning environments, and represents the material and spatial instantiation of imagined computational neurofutures-in-the-making” (2017: 83-84). On these terms, the future of learning is smart; it relies on brains and algorithms learning together, and from each other.

In short, I propose that today’s brains and algorithms are co-constitutive; algorithms are unthinkable without brains, but so are brains without algorithms.

Exploring this relationship, this essay can be read as a collection of brain-algorithm co-incidences: contexts in which the two exist side by side, and inform one another. My aim is to reflect on the different ways in and through which these two concepts have come to overlap. Here, I focus on four different ‘cases’, each of which probes a different position of the brain’s role vis-a-vis algorithms.

Case I: The brain as a guiding metaphor

The brain metaphor is a starting point, which long precedes computers as such. To get a popular, and by now somewhat historical, glimpse into the metaphor’s mutations, I’ve been reading books like Arbib’s (1972) ‘The Metaphorical Brain: An Introduction to Cybernetics as Artificial Intelligence and Brain Theory’ and Allman’s (1989) ‘Apprentices of Wonder: Inside the Neural Network Revolution’, which, as the titles themselves suggest, put this metaphor centre-stage. Texts like these have been formative in establishing the discursive and explanatory link between brains and algorithms outside of narrow expert circles.

The notion of a ‘brain metaphor’, which I use rather freely and without much qualification, comes with countless nuances that I cannot do justice to within the scope of this text. As Arbib (1972) lays out in his book, explanatory metaphors of human cognition build on a range of evolutionary and cybernetic principles, which have in turn inspired varying approaches to developing intelligent machines. Arbib highlights the ‘artificial intelligence approach’ and the ‘brain theory approach’; the first strives to yield “intelligent” behaviour without regard for emulating the (“intelligent”) biological structures that achieve it, while the second attributes importance to structurally similar designs. Allman similarly reiterates that some ‘study the brain’s biology; others, the mind’s behaviour’ (1989: 6). By accounting for these nuances, such texts give their readers a more grounded and profound understanding of the brain-algorithm coupling.

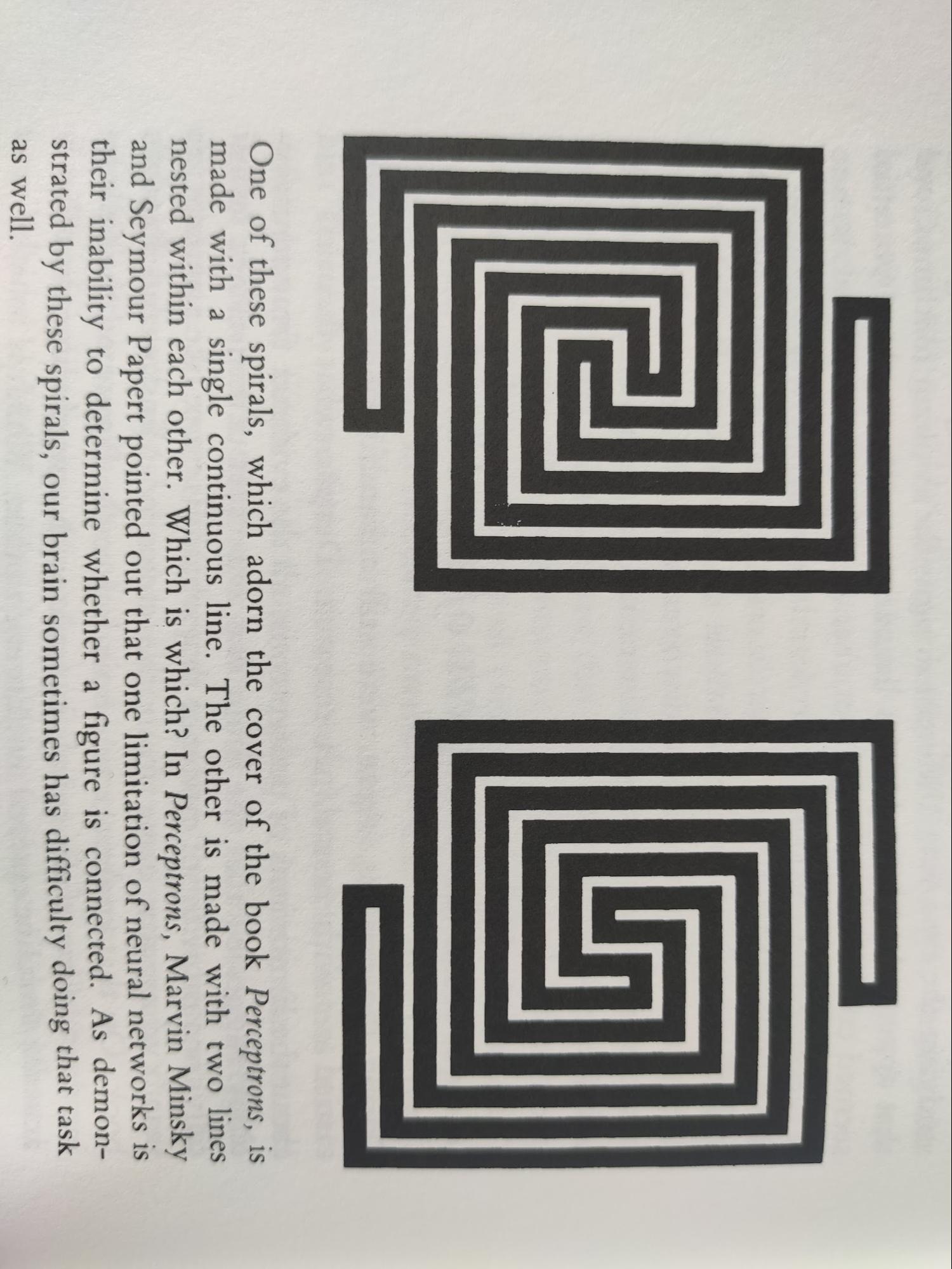

Sources like these are careful to stress the metaphorical, comparative nature of the relationship. The image above is one example in which Allman (1989) establishes shared ground between neural networks and human brains, by pointing to a task that is difficult for both. At the same time, though, the book abounds with qualifications that stress the differences; for example, stating that unlike the most powerful computer (remember that it’s the late 80s) ‘we can recognize our mothers right after they’ve had a haircut’ (Allman, 1989: 6). In a more general manner, Arbib was cautious to qualify his writing by stating that ‘[a] good metaphor is a rich source of hypotheses about the first system but must not be regarded as a theory of the first system’ (1972: 10). Shielded from critique in this way, the carefully qualified metaphor has shaped people’s imaginaries of computers and their software for decades.

On a more contemporary note, what makes the brain metaphor particularly interesting is its cultural, political and economic reach. In a time characterised by phrases like neuroculture (Sampson, 2016) or brain culture (Pykett, 2015), it comes as little surprise that neural networks have become some of the most influential algorithms. The neuro- prefix sells, and neuroscientific explanations have, at the same time, become central to how we understand ourselves.

Fitzgerald and Callard (2015) describe today’s obsession with the brain across different disciplines as a shift towards neuroscientific authority. They argue that it is:

‘undeniable that many facets of human life that were, for much of the 20th century, primarily understood through the abstractions of ‘culture’ or ‘society’ – commercial and economic life, governance, historical change, identity, distress and suffering – are increasingly understood as functions of the cerebral architecture of individuals or of groups of individuals (for examples, see Adolphs, 2003; Camerer et al., 2005; Chiao, 2009; for reflections, see Rose, 2010; Vrecko, 2010; Matusall, 2012).’ (2015: 7)

In other words, neuroscience is framed as a new cultural activity that orders, and perhaps even dominates, our worldview. The widespread investment in the so-called ‘metaphorical brain’ should be viewed in this context; the popularity of the brain is one of the elements that carries the brain-algorithm coupling.

At the same time, the embodied dimension implied in the notion of ‘brain’ helps us make sense of otherwise abstract, invisible and intangible machine learning algorithms. Commenting on the reversed computer-mind metaphor, influential as an explanation of human subjectivity, Sampson (2016) points out the pitfalls of this coupling: it fails to account for the sense-making that arises from brain-environment and brain-body encounters. He argues that elements like non-brain body parts and beyond-the-body encounters are just as important to our sense-making as the brain itself. Conversely, it can be argued that the brain-computer metaphor carries with it an association with the bodies that these metaphorical lumps of neural tissue are enmeshed in. Its embodiment, therefore, makes the brain a particularly powerful guiding metaphor in understanding algorithms.

Nevertheless, this does not mean that a parallel state of embodiment is attributed to the algorithms that the metaphor describes. In some contexts, it can even be necessary for the brain to remain no more than a ‘metaphor’, as anything more might begin to push the boundary of what people find permissible within their worldviews. In a study on mind perception and the uncanny valley, Gray and Wegner (2012) found that people would more readily attribute brain-like qualities to an abstract piece of software than to a humanoid robot. The participants found body-fitted robots unnerving because, as Gray and Wegner speculate, the physical humanoid body prompted them to see mind, rather than simply brain-like or mind-like qualities. This suggests the metaphor was perhaps stretched too far, posing a challenging reality rather than a likeness kept a safe distance away. That is to say, in certain contexts, the brain-algorithm coupling describes at most a metaphorical relation with two ends that are distinctly separate. Considering the brain a metaphor grants a comfortable distance: we can attribute brain-like qualities to algorithms while considering them mindless, without this posing any inherent contradiction.

Case II: The brain in computer science textbooks

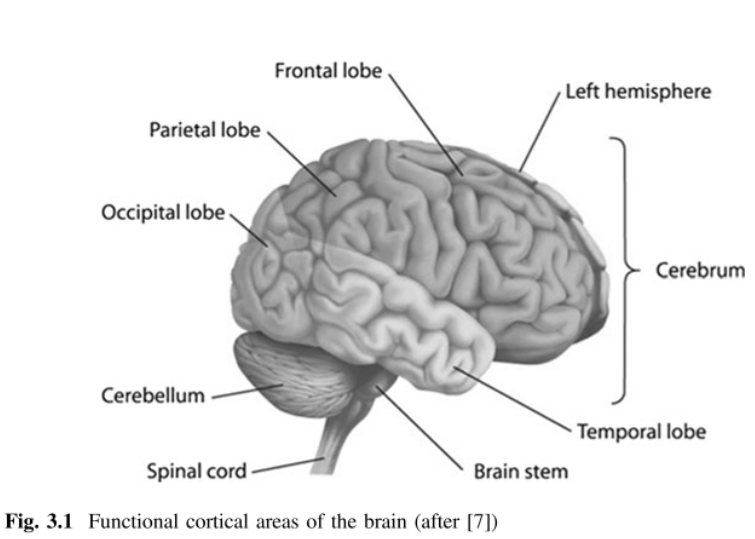

Images of brains abound in computer science (text)books in different shapes and styles; one might wonder, what would today’s AI algorithms be without brains to emulate? While early artificial neural networks claimed vague resemblance to the structure of neurons, it is interesting to see detailed anatomical diagrams or EEG scans of human brains increasingly making their way into computer science literature. This careful consideration of and attention to the (mostly human) cerebral architecture seems to stretch the relationship between brains and algorithms beyond a merely metaphorical association.

More than likeness, researchers and developers of algorithms strive to reproduce particular functions of the brain through their work. In his book on spiking neural networks and brain-inspired AI, Kasabov (2019), for instance, states that ‘real brain function’ is the benchmark for the evaluation of certain algorithms in the field. Next to a successful ‘execution’ of the given function, criteria like efficiency and speed are, of course, also important points on which algorithms draw inspiration from the brain; in comparison to current ANNs, ‘real biological brain functions usually happen in much faster duration.’ (Kasabov, 2019: 129)

Alongside diagrams and benchmarking against concrete functions, textbooks like Kasabov’s take care to educate their readers about a more holistic understanding of the brain’s development. Kasabov explains for example that:

‘Functional connectivity develops in parallel with structural connectivity during brain maturation where a growth-elimination process (synapses are created and eliminated) depends on gene expression and environment.’ (2019: 546)

While this is not immediately linked to a parallel algorithmic architecture in the text, it provides context from a neuroscientific perspective and figures as a proposition for future sources of inspiration. In this way, scientific understanding of the brain asserts itself as a body of base guidelines for future algorithmic research. In other words, the interdisciplinarity of neuroscience and computer science promotes a convergence between their subjects of research.

Similarly, in practice the application of algorithms to brain exploration and vice-versa is common; the two disciplines (I mean this in a broader sense, so as to include associated fields) fuel one another. Deep neural networks are for example used for brain-related data analysis and modelling. This concerns, but also enables the use of different types of spatio-temporal brain data, like EEG, MEG, fMRI, DTI, NIRS. These types of data enable the development of mappings, coordinate systems or even ‘brain atlases’ as Kasabov (2019) explains, building on Korbinian Brodmann’s (1868-1918) work. Continued prevalence of the cybernetic metaphor encourages the implied transferability between such brain mappings and algorithms; as I mentioned in the caption beneath the image above, Kasabov for example refers to the brain as the ‘ultimate information processing machine’ (2019: 88).

This has the two-fold impact of framing the brain in ways legible to algorithms, but also in ways well suited for emulation through algorithmic means. Indeed, as Kasabov writes, ‘[i]f trained on EEG data/knowledge when humans are performing cognitive functions, a BI-SNN can learn this knowledge and can possibly manifest it autonomously under certain conditions.’ (2019: 292) This implies that brain-based data could serve as a coordinate system for algorithms through which knowledge could be shared directly between algorithms and brains. Of course the immediate and seamless interface that this pokes at is a mere future-bound speculation, but the fact that brain data is being packaged and used in a way that is both dependent on and potentially useful to the development of algorithms demonstrates a tight-knit relationship between brains and algorithms in this domain.

Case III: The brain as a development project

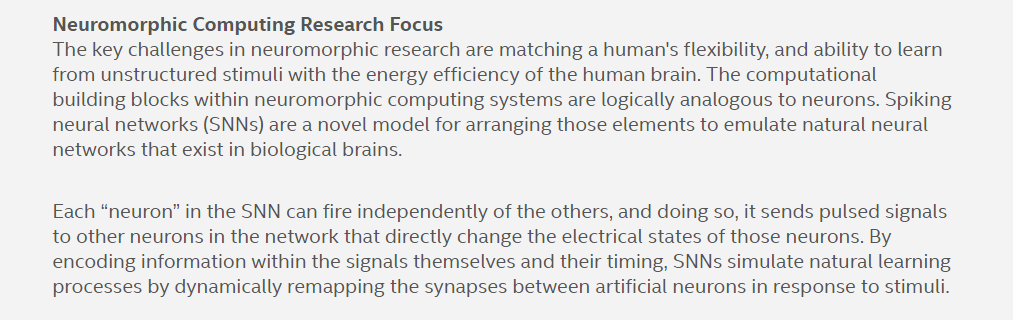

The fantasy of recreating the human brain is becoming more tangible through the infrastructure that is being built up to accommodate it. Tech companies like Google, IBM or Intel each have their own versions of Brain teams or labs that actively chip away at research, innovation and development work geared towards emulating the brain’s architecture and functions. Working teams like Intel’s Neuromorphic Computing Lab are profiled as ‘a collaborative research effort that brings together teams from academic, government, and industry organizations around the world to overcome the wide-ranging challenges facing the field’ (Intel Labs, n.d.). Although I have not found explicit statements on funding, Williamson writes that IBM’s Brain Lab had funding in the order of ‘hundreds of millions of US dollars’ (2017: 88), a large part of which was from the US Defense Advanced Research Projects Agency (DARPA). Google pitches its Brain Team as one that operates on the ‘Google Scale’ with ‘resources and access to projects impossible to find elsewhere’ (Google Brain Team, n.d.). Not to mention that the brain-inspired projects of Google, IBM and Intel all claim some affinity to ‘cutting-edge’ technology and research. The next generation of AI projected by such large-scale projects demonstrates an investment in the brain-algorithm coupling, which positions it as a possible, or perhaps even probable future — importantly, the investment behind it is both financial and ideological.

Although most of these projects do not claim to be developing a full-blown ‘artificial brain’, their approach to what constitutes their envisioned brain-inspired computers/algorithms demonstrates a convergence between the two. As I mentioned earlier, AI could traditionally be understood as being split between the ‘artificial intelligence approach’ and the ‘brain theory approach’, each of which relates to the brain metaphor differently (Arbib, 1972). Today’s neurocomputing projects combine both the functional and the structural emulation of the brain and its architecture. Intel’s Neuromorphic Computing Lab, for instance, is developing ‘new algorithmic approaches to emulate the human brain’ using the corporation’s ‘self-learning neuromorphic research chip, code-named "Loihi."’ (Intel Labs, n.d.). A similar approach is echoed in the research of IBM’s Brain Lab, whose work on ‘cognitive computing’ involved both emulating ‘the human brain’s abilities for perception, action and cognition’ and the development of ‘neurosynaptic chips’ that can ‘emulate the neurons and synapses in the human brain’ (IBM Research, 2014: n.p., in Williamson, 2017: 88). It seems then that the distinction between these two approaches is diminishing, implying that artificially emulated intelligence requires brain-based architectures. Perhaps this convergence between the fantasy of an ‘artificial brain’ and a ‘brain-inspired computer’ suggests a parallel convergence between the notions of brains and algorithms, as the gap between an analogue and a computerised version is narrowing.

While the brain is a source of inspiration and subject of emulation in such initiatives, its own valuation is changing in the process as well. As mentioned above, the work of the brain labs and teams that I’m focusing on in this section is leading to new software and hardware innovations. At the same time though, the findings and processes that accompany these innovations shape the way we view the brain. This is particularly notable with regard to the functions of the brain that come to be viewed as admirable, as a result of the difficulty that researchers, computer scientists and engineers face in modelling them algorithmically. To Intel’s Neuromorphic Computing Lab, for example, different aspects of ‘managing uncertainty’ are some of the key design and engineering challenges. They explain that:

“The fundamental uncertainty and noise that are modulated into natural data are a key challenge for the advancement of AI. Algorithms must become adept at tasks based on natural data, which humans manage intuitively but computer systems have difficulty with.” (Intel Labs, n.d.)

Where other brain functions like carrying out mathematical operations or ‘pattern recognition’ have been partially or fully matched (or, in some cases, even superseded) by algorithms, issues like managing uncertainty or filtering noise continue to prove technologically challenging to emulate. As a result, these are also the functions or capacities of the brain that come to be recognised as unique, valued and celebrated. This means that similar ‘brain as a development project’ initiatives, as I’ve termed them here, are not only a way to learn new things about the brain/intelligence (in line with the two-fold aim of AI research), but also a source of new values for brains themselves. This new valuation is based on algorithmic terms.

Case IV: The brain understood through machine learning

Algorithmic processes and functions are becoming valid sources of inspiration for understanding the brain itself. This goes hand in hand with the convergence between fields like neuroscience and computer science that I mentioned previously. This means that the ‘validation’ that I am referring to in this section should also be understood in an academic context.

This shift towards a state of mutual inspiration builds on the cybernetic metaphor applied to phenomena like ‘intelligence’ or ‘cognition’. As I have discussed throughout the previous sections, the brain has been a continuous source of inspiration for computational systems. Research in this domain is increasingly portraying the two as analogues. A recent paper reviewing the ways in which infant learning can inspire machine learning, for example, describes human infants as ‘a close counterpart to a computational system learning in an unsupervised manner as infants too must learn useful representations from unlabelled data’ (Zaadnoordijk, Besold & Cusack, 2020: 2). Here, the infant and algorithmic system are portrayed as ‘counterparts’ that face the same challenges in the world. Further on in the paper, Zaadnoordijk, Besold and Cusack go on to state that ‘[n]ot all inductive biases have been made equal. Infants do not have to start their developmental trajectory from a complete tabula rasa or from an arbitrary starting setup - and neither should neural networks.’ (2020: 3) This concluding remark is obviously intentionally phrased in a somewhat provocative, cheeky manner, but nonetheless evokes a sense of the conditions that neural networks ought to be provided with: they should not have to start from a ‘tabula rasa’. Stated in contrast to the infants’ ‘starting setups’ (their homes, their caretakers etc.), this perhaps brushes against loaded domains like the rights that two counterparts should share.

One step further in establishing this bi-directionality of mutual inspiration in the brain-algorithm coupling, we find inferences from algorithmic systems in domains like cognitive sciences themselves. I was struck by this when reading a paper by Raz and Saxe (2020) on infants’ endogenous learning motivation, an extract of which is included as an image above (highlighting is my own). Here, machine learning is used as an analogy for explaining infant learning; the article suggests that machine learning techniques like ‘targeted sampling’ might underlie the ways in which human infants learn. This not only further extends the shared jargon between the infant’s and machine’s learning brains — both practice ‘sampling’, both benefit from ‘generalization and data-efficiency’, both are ‘self-generating’ their curricula — but also validates algorithmic conceptions of the human brain. Through such speculations and analyses, brains and algorithms become equals, in the sense that it is no longer merely the latter that is being modelled on and inspired by the former; instead they come to inform one another.

A more-than-metaphorical coupling

Beyond the first ‘metaphorical’ case, the brain-algorithm co-incidences that I have discussed here all step outside of the boundaries that Arbib described, namely that ‘[a] good metaphor is a rich source of hypotheses about the first system but must not be regarded as a theory of the first system’ (1972: 10). The brain, featuring as the ‘first system’ here, is increasingly being understood through algorithmic means and valued on algorithmic terms. Brains and algorithms can be viewed as co-constitutive concepts.

Building on the discursive exercise, which I have limited myself to within this text, I find it relevant to point to the reasons why this coupling is an important one to trace and follow. As numerous scholars with a critical perspective on today’s state of ‘neuroscientific authority’ point out, the ways in which the brain is theorised have potentially far-reaching socio-political implications (for example: Pickersgill, 2013; Hayles, 2014; Rose & Abi-Rached, 2014; Fitzgerald & Callard, 2015; Sampson, 2016; Williamson, 2017). One frequently discussed example that illustrates this is the neuroscientifically backed notion of the brain’s plasticity. Briefly put, according to scholars like Malabou (2009) or Sampson (2016), neuroplasticity has been readily readapted to a form of ‘flexibility ripe for exploitation by market forces’ (Sampson, 2016: 136); this manifests itself in the form of, for instance, flexible work or zero-hour contracts, where being flexible and endlessly adaptive is a rewarded (and required) virtue at the workplace. Examples like these point to the impact that brain-related research and innovation can carry with it.

Such perspectives often depart, broadly speaking, from a criticism of the field of neuroscience. As I have argued here, the intersection of neuroscience with computer science is a crucial dimension in the influence that both fields can leverage. Indeed, speaking for the computer science side, researchers like Beer (2017) make clear that the social power of algorithms shapes countless aspects of our social and mental lives, many of which are, today, understood algorithmically. For this reason, I find it particularly relevant to explore the brain-algorithm relationship further; not only are the two concepts co-constitutive, but they have also both established themselves as authoritative and powerful. In the long run, this coupling is likely to impact our subjectivity and daily life; as Williamson (2017) argues, future ‘brain/code/spaces’ may offer opportunities to ‘rewire’ human cognition.

References

- Allman, W. F. (1989). Apprentices of wonder: Inside the neural network revolution. Bantam Books, Inc.

- Arbib, M. A. (1972). The metaphorical brain: An introduction to cybernetics as artificial intelligence and brain theory. John Wiley & Sons, Inc.

- Beer, D. (2017). ‘The social power of algorithms’, Information, Communication & Society, 20(1): 1-13, DOI: 10.1080/1369118X.2016.1216147

- Fitzgerald, D., & Callard, F. (2015). Social science and neuroscience beyond interdisciplinarity: Experimental entanglements. Theory, Culture & Society, 32(1), 3-32.

- Gray, K., & Wegner, D. M. (2012). Feeling robots and human zombies: Mind perception and the uncanny valley. Cognition, 125(1), 125-130.

- Google Research. (n.d.) Google Brain Team. Google. Retrieved on 3 Oct 2020 from: https://research.google/teams/brain/.

- Hayles, N. K. (2014). Cognition everywhere: The rise of the cognitive nonconscious and the costs of consciousness. New Literary History, 45(2), 199-220.

- Intel Labs. (n.d.). Neuromorphic Computing: Beyond Today’s AI. Intel. Retrieved on 3 Oct 2020 from: https://www.intel.com/content/www/us/en/research/neuromorphic-computing.html.

- Kasabov, N. K. (2019). Time-space, spiking neural networks and brain-inspired artificial intelligence. Heidelberg: Springer.

- Malabou, C. (2009). What should we do with our brain? Fordham University Press.

- Pickersgill, M. (2013). The social life of the brain: Neuroscience in society. Current Sociology, 61(3), 322-340.

- Pykett, J. (2015). Brain culture: Shaping policy through neuroscience. Policy Press.

- Raz, G., & Saxe, R. (2020). ‘Learning in Infancy is Active, Endogenously Motivated, and Depends on Prefrontal Cortices’. PsyArXiv Preprints.

- Rose, N., & Abi-Rached, J. (2014). Governing through the brain: Neuropolitics, neuroscience and subjectivity. The Cambridge Journal of Anthropology, 32(1), 3-23.

- Sampson, T. D. (2016). The assemblage brain: Sense making in neuroculture. University of Minnesota Press.

- Williamson, B. (2017). ‘Computing brains: learning algorithms and neurocomputation in the smart city’. Information, Communication & Society, 20(1), 81-99.

- Zaadnoordijk, L., Besold, T. R., & Cusack, R. (2020). ‘The Next Big Thing(s) in Unsupervised Machine Learning: Five Lessons from Infant Learning’. arXiv preprint arXiv:2009.08497.